I want to start out by observing that no, you are not crazy or overreacting: the corporate world very much does have a rotten, putrescent core that literally loses sleep over the idea that anyone, anywhere, has money it hasn’t gotten its hands on yet. This is not the normal state of affairs, though; these are very, very sick people who have wormed their way into everything and often manage to get themselves into positions of power… but they’re a tiny minority of the corporate world. They get a disproportionate amount of attention because they have a disproportionate amount of power, and are exceptionally egregious in the ways they abuse it. Everybody talks about those people.

I want to talk about some true believers. People who are honestly interested in making your life better. People who are legitimately consumed by a desire to improve the world around them. And this revolves around the concept of the “un-problem.”

The un-problem is typically described in terms of innovation and creativity, but at its core it is a problem you are too stupid to know you have. You may, for example, go through elaborate processes to draw straight lines over long distances… or you can get yourself a chalk line.

It’s perfectly reasonable, if you don’t work in the trades, not to know what a chalk line is. And if you’re a reasonably intelligent person, you may think “well, I can figure out how to draw a perfectly straight line!” and start designing a process or even a tool to draw the line and make sure it stays straight.

But we have already solved this problem. You pull a string through a pile of chalk powder and stretch it across the space where you want the line. You pull the string away from the surface, then let it snap back into place, where it makes a clean and perfectly straight line of chalk that can be easily washed away. We have created a handy little tool for this - a container of chalk dust with a spool of twine in it. There’s a versatile little hook on the end to attach at one end of your desired line.

If you don’t know what a chalk line is, you know you have one problem - you need to draw a very long and very straight line - but in the process of trying to solve that problem, you are just going to give yourself more problems. And all of these problems feel like they’re necessary to solve the main problem. But once you know the chalk line exists, all of those problems disappear. You only had them because you were too stupid to know about chalk lines.

That’s not a value judgment. We were all too stupid to know about chalk lines, at first. And we remained too stupid to know until someone told us.

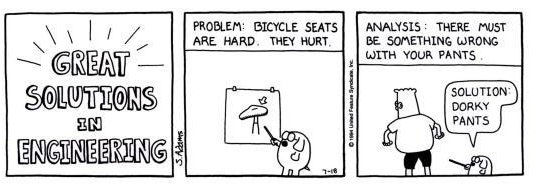

The tech industry is consumed with trying to find problems like this, ideally by creating a brand new product nobody else has, so you will give them money. Scott Adams is a thoroughly repulsive and detestable person, but before he lost his mind he made some fairly astute observations about engineers.

Whether we’re talking about physical products or software, engineers and their companies fundamentally try to solve problems. That’s what they get paid for: to solve people’s problems. And because they’re not stupid, they want to scale that up. When you solve people’s problems for a living, you start to notice most people have the same problems, which have the same solutions. So you package that up into a box and say “if you have this problem, here is the solution.” And then it’s a question of marketing and promotion - people who have the problem need to know the solution exists.

But sometimes the problem isn’t a problem people have.

Consider the AI industry. It does not solve a problem people actually have. The problem engineers (including myself, for a while) were solving over the last forty years has been the Turing test - how do you convince a human observer that your machine is, in fact, a human being?

Well, it’s fairly simple. Make it bad at maths. Force your computer to perform mathematical operations at a glacial pace, working one digit at a time in base ten the way a grammar school student might, and introduce an error every so often. Shift the decimal one place in either direction. Drop a zero. Forget to carry. Raise or lower a digit by 1.

Because a computer would never do these things.
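For the curious, here is a rough sketch of that trick in Python. It is an illustration only, not anything anyone shipped: the function name `sloppy_multiply`, the `error_rate` and `seconds_per_digit` knobs, and the exact mix of slips are all assumptions made up for this example.

```python
import random
import time


def sloppy_multiply(a, b, error_rate=0.05, rng=None, seconds_per_digit=0.0):
    """Multiply two non-negative integers the grade-school way, one base-ten
    digit at a time, with an occasional human-style slip.

    error_rate and seconds_per_digit are hypothetical knobs for this sketch.
    """
    rng = rng or random.Random()
    a_digits = [int(d) for d in reversed(str(a))]  # least significant first
    b_digits = [int(d) for d in reversed(str(b))]
    total = 0
    for i, bd in enumerate(b_digits):
        carry = 0
        row = 0
        for j, ad in enumerate(a_digits):
            time.sleep(seconds_per_digit)  # the "glacial pace" part
            product = ad * bd + carry
            digit, carry = product % 10, product // 10
            if rng.random() < error_rate:
                if rng.choice(["forget_carry", "off_by_one"]) == "forget_carry":
                    carry = 0  # forget to carry
                else:
                    digit = (digit + rng.choice([-1, 1])) % 10  # nudge a digit by 1
            row += digit * 10 ** j
        row += carry * 10 ** len(a_digits)
        shift = i
        if rng.random() < error_rate:
            # write the whole row one column too far left or right
            shift = max(0, i + rng.choice([-1, 1]))
        total += row * 10 ** shift
    return total
```

With `error_rate=0.0` it is just slow, correct long multiplication; turn the rate up and it starts dropping carries and misplacing rows the way a tired person would. Passing a seeded `random.Random` makes the mistakes repeatable.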

We identify computers primarily through two things we know computers don’t do: they don’t have emotions or personalities, and they don’t make mistakes. If you ask them what 512 times 368 is, they will not say it’s “about” 185,000. They will tell you it’s 188,416. (Hurriedly checks on calculator... yeah, that’s what they’ll tell you.) And they’ll do it really fast — I may have gotten the right answer (lucky!), but it took me several seconds and I almost fucked it up and said 18,816.

Because in the middle of my calculations, I got the decimal point off by one: 368 times 12 is 4,416, and my brain combined the two 4’s, so when I added the 4,416 to the 184,000 I got 188,000 but then mentally skipped the next four because I had just done a four. That’s how human beings do maths. We have to double and triple check our results because we suck and get things wrong all the time.
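Spelled out as a few lines of Python, the mental arithmetic looks like this. The variable names are only for illustration, and the "slip" is modelled as nothing fancier than losing the first 4 when writing down the sum.

```python
# Split 512 into 500 + 12 and multiply each part by 368.
big_part = 368 * 500     # 184,000
small_part = 368 * 12    # 4,416
exact = big_part + small_part

# The near-miss: drop the first 4 while writing the answer down.
slipped = int(str(exact).replace("4", "", 1))

print(f"{exact:,}")    # 188,416
print(f"{slipped:,}")  # 18,816
```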

What nobody has ever really stopped to consider is that the Turing test is not a real problem. It is a puzzle posed by Alan Turing to point out that you need to clear this hurdle before he will believe a machine is “intelligent.” And we have been trying to solve that problem because for some reason, we think it is important.

But we don’t actually need Alan Turing to believe our machine is human. We don’t need anyone to believe it is human. You can be perfectly aware the machine is, you know, a machine. That’s fine. Nobody would say the computer in Star Trek might be human; it may have Majel Barrett’s voice, but it has no meaningful personality, no emotions, no opinions. It doesn’t need them. It is unapologetically a computer, and it makes no effort to pretend it is a person.

Lieutenant Commander Data is trying to learn how to be a person, and wants to be a person, but does not under any circumstances try to pretend he is not an android with a positronic brain. You would still say he’s intelligent. You would still say the computer is intelligent.

“I can’t tell it’s a computer” has been obviously unimportant for thirty years. People not only don’t have a problem with knowing it’s a computer, they actively do not want the computer to pretend it’s a person. It’s fucking creepy. Nobody likes it.

Whenever we have used a machine to mass-produce human interactions, people have gotten angry at us. Bulk mailings. Telephone answering machines. Robocalls. Voice menus. Email lists. Customer support chatbots. Nobody likes them. They make literally everyone angry. And it makes them even angrier when you try to fool them into thinking they are interacting with a person, not a machine.

The tech industry is absolutely convinced that there is an un-problem out there which can be solved with a fake person.

And there is! There is the problem of humanoid robots being creepy as fuck. If you put a fake person in it that was not too bright and kind of dorky, it would go a long way toward making people more comfortable. Think Gir from Invader Zim.

But we don’t have humanoid robots yet, although we are working on them… or at least we were; I’m told Elmo’s head of design on that project just quit… and even when we do, it’s unlikely the average person will give a shit. Most of us don’t need a fake person because we don’t need a real person. We haven’t hired a person to help us with anything, so why would we buy - or, more likely, lease - an expensive machine to help us?

Part of the path leading there is convincing people to get a fake person without a body to help with things. If we can convince people they need a fake person to help them think and talk and write, it will be fairly easy to convince them their fake person would be even better if it could open doors and get them a beer and have sex with them.

The problem is that most people, where they have a person in their lives, have that person there for reasons that kind of hinge on them being a real person.

This isn’t a problem you can solve with fake people. If you don’t have enough people in your life, filling your life with fake people doesn’t solve the problem. It just covers it up. And we’re already doing this! People will cover their beds with stuffed animals, or their walls with posters, or parasocially fixate on some arbitrary celebrity.

The problem isn’t that the fakes aren’t good enough. The problem is that the fakes aren’t real. We had previously solved this problem with social media, which lets real people find other real people, but there was not enough money in that so we are systematically destroying it with repulsive ads and divisive politics.

The actual un-problem here is that if you stopped being complete fucking freaks shoving your bullshit in everyone’s face all the time, trying to get every last dime they have, they would like you and want to buy your shit.

We’re all living in little bubbles where we don’t know what the fuck we are doing because we don’t challenge a little laundry list of incorrect assumptions. It’s easy to sit out here knowing “everybody wants this” is complete horseshit, but when you are sitting in there you are living in a world where everybody working on the thing is saying “man I want this so much.”

I used to work on automated highway systems. The idea was that while we could use AI to drive cars, it was a Good Idea to set the AI on the sole task of avoiding collisions while the job of navigating was done by reading waypoints embedded in the highway. Essentially, we would use a kind of enhanced GPS system — and this was before GPS was widely used in consumer products — to do the routing and navigation. The only thing the AI did was override the general commands if weird shit happened on the road.

And this was fairly simple. The AI would use a series of sensors designed to identify any solid objects near the vehicle, and if that object moved into the vehicle’s path the AI would reduce speed or alter course to avoid the collision. It wasn’t even a particularly sophisticated AI; by modern standards, you might not even call it AI at all.
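To give a sense of how little “AI” that takes, here is a minimal sketch of the override idea in Python. It is not the system described above. Every name and number in it (`Command`, `Obstacle`, `supervise`, the lane half-width, the braking rate, the safety margin) is a made-up assumption, and it only handles the “reduce speed” half; altering course is left out.

```python
from dataclasses import dataclass


@dataclass
class Command:
    speed_mps: float   # target speed from the waypoint navigator
    steer_rad: float   # steering angle from the waypoint navigator


@dataclass
class Obstacle:
    ahead_m: float     # distance ahead of the vehicle along its path
    lateral_m: float   # sideways offset from the centre of the path


def supervise(cmd, obstacles, half_width_m=1.5, decel_mps2=4.0, margin_m=5.0):
    """Pass the navigator's command through unless something solid is in the path.

    All thresholds are hypothetical defaults chosen for this sketch.
    """
    # Distance needed to brake to a stop from the commanded speed, plus a margin.
    stopping_m = cmd.speed_mps ** 2 / (2 * decel_mps2) + margin_m
    in_path = [o for o in obstacles
               if o.ahead_m >= 0 and abs(o.lateral_m) <= half_width_m]
    if not in_path:
        return cmd
    nearest = min(o.ahead_m for o in in_path)
    if nearest >= stopping_m:
        return cmd
    # Override: cap speed at what could still stop short of the obstacle.
    usable_m = max(0.0, nearest - margin_m)
    safe_speed = (2 * decel_mps2 * usable_m) ** 0.5
    return Command(speed_mps=min(cmd.speed_mps, safe_speed),
                   steer_rad=cmd.steer_rad)
```

The navigator does all the routing; `supervise` just sits between it and the wheels and mostly does nothing. For example, `supervise(Command(30.0, 0.0), [Obstacle(37.0, 0.0)])` caps the speed at 16 m/s, while an obstacle three metres off to the side is ignored.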

Everybody I worked with wanted a car that could drive itself. Literally everybody. The whole team. The teams working on other projects. Everybody thought a self-driving car was so cool. And then one day someone was saying “like, just imagine: you go out and you get in your car and it just goes where you tell it to go, you don’t even have to pay attention.”

And I thought… that’s a taxi. We already have that. It is already a thing.

But then I realised almost every argument for why you don’t take a taxi is an argument for why you don’t want a self-driving car, so selling this to the general public was going to be every bit as hard as selling taxis for primary transportation. “Don’t buy a car, take a taxi” would be an absolutely insane marketing message. Nobody wants this. Nobody is gonna want this.

Which was my “naked emperor” moment. The emperor had no clothes. We were getting investment and doing work and building a project that nobody wanted, that nobody was ever going to want, and literally nobody seemed to care. Everybody was firmly of the belief that because we were working so hard and doing so much, we would all be applauded and rewarded in the end.

That’s what I see going on in AI at the moment. They’re all completely and totally full of shit, but I don’t think the people in the trenches know they’re full of shit. The people at the top probably know. That’s generally my experience: the executives know we’re never going to do what we’re trying to do, but it’s their job to go out and tell people we are. Meanwhile, the people at the bottom are believing every word.

So this is like twice as long as I wanted it to be, and I don’t know that the extra length helped. But the entire purpose of this Substack is just for me to keep writing, so… yay? Mission accomplished? Go, me? Whatever.